When most people think of Snowflake, its powerful data platform capabilities likely come to mind – storing, managing, and analyzing vast amounts of data with ease. However, Snowflake’s potential extends far beyond data storage. It also enables the creation of fully automated, end-to-end data pipelines without relying on external tools.

In this post, we’ll explore how Snowflake’s native features – such as Snowpipe, dynamic tables, streams, tasks, stored procedures, and external access integrations – combine to create seamless automation workflows. We’ll illustrate this through a real-world use case: how DAS42 helped a leading managed service provider’s service assurance team automate critical alert management processes. Through the use of Snowflake’s automation capabilities, we transformed a time-consuming, manual workflow into a streamlined, scalable solution that improves operational efficiency and customer experience.

Let’s dive into how these Snowflake objects work together to build an automated pipeline and drive business value.

Use Case

DAS42 worked closely with a large managed services provider. A big part of their product is service assurance and ensuring their customer’s devices stay online and connected. When a customer’s device goes down, that device immediately sends out an alert to an AI Ops platform. The platform focuses on improving incident management and monitoring. It has functionalities related to alert enrichment and compression which are designed to help manage a large volume of alerts. AI Ops enriches raw alerts with additional context and metadata such as customer details, location, and time of the alert, making them more informative and actionable. Leveraging this enriched data, it intelligently compresses related alerts by grouping them into incidents, providing the Service Assurance team with a unified view of the events occurring at a site. Typically, the Service Assurance team’s first course of action when a new alert comes in is to contact the customer directly and confirm if the power at that site is up or down. This process takes time and isn’t exactly scalable. However, with the use of a power outage intelligence service and Snowflake’s automation capabilities, we are able to automate the entire process of confirming power thereby driving efficiencies within the Service Assurance process and improving the customer experience.

These are the Snowflake objects that make this possible:

- Snowpipe: for detecting when there are new files in a stage and ingesting them as soon as they’re available

- Dynamic tables: for detecting when that data is ingested and transforming it to a structured format

- Streams: for tracking changes to the dynamic tables and triggering tasks when there are new inserts

- Triggered tasks: for calling a stored procedure that performs some action when there are new inserts to a table

- Stored procedure: to specify what action to take when the task gets triggered

- External access integrations (optional): for being able to make external calls from Snowflake, if that is the action the stored procedure should take

Solution

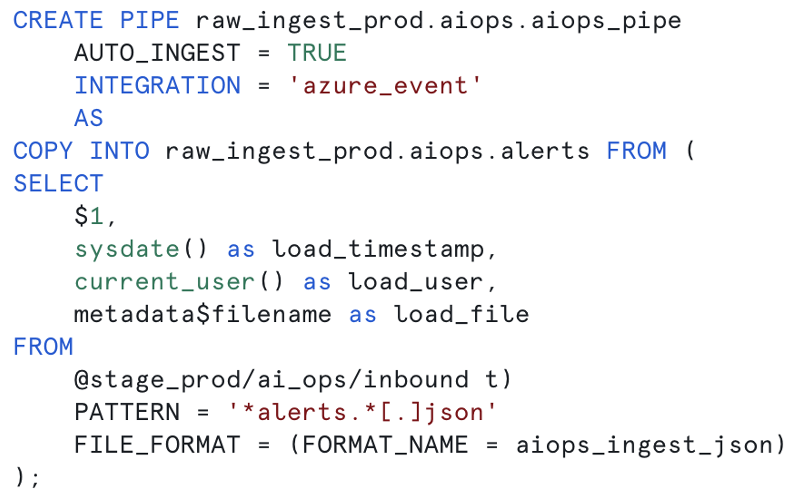

We have configured alert data from AI Ops to land in our Azure storage container in real time. We leverage Snowpipe to detect when new files in the external stage land, and then ingest the semi-structured data into a table in Snowflake.

To do this, we use a notification integration in Snowflake to allow the platform to interact with a third-party messaging service. In this case, we are using Azure Storage Queue.

In summary, the combination of the notification integration and Snowpipe detects when there are new alert files in Azure and then copies the semi-structured data from those files into a Snowflake table.

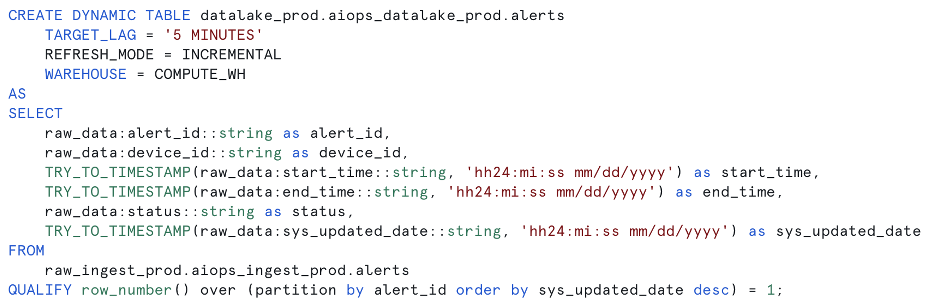

Once the raw data lands in Snowflake, we provision a dynamic table that is dependent on the raw table to automate the process of unpacking the JSON and performing any other required transformations to the data. Dynamic tables are particularly useful for automation use cases because they are able to detect changes to their upstream tables and automatically implement those.

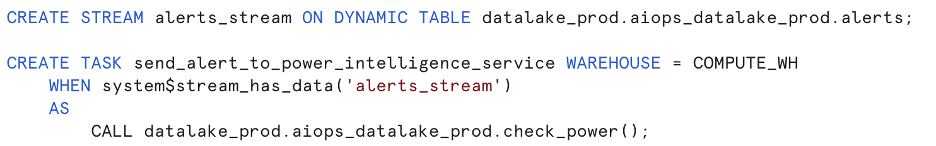

Now we can create a stream of that dynamic table. Streams record DML changes made to tables and metadata about the changes that were made. New records in a stream can be used to trigger tasks and automatically take action on new data. In this case, we use a stream to trigger a task that runs a stored procedure.

The task runs a stored procedure that makes a request to a power outage intelligence API. The API provides intelligence regarding the power status at a specific location. Before we can make an API call directly from Snowflake, we have to set up an external access integration which requires a few additional Snowflake objects.

The first is a network rule which allows you to make an outgoing request from Snowflake and specify the domain that you’re allowed to make the request to. The second object is a secret which allows you to store API tokens and credentials securely.

Snowflake has support for several authentication methods. In this use case, we simply use a ‘generic string’ secret to store a string token. The final object is the external access integration which configures the external connection using the network rule we created and any secrets.

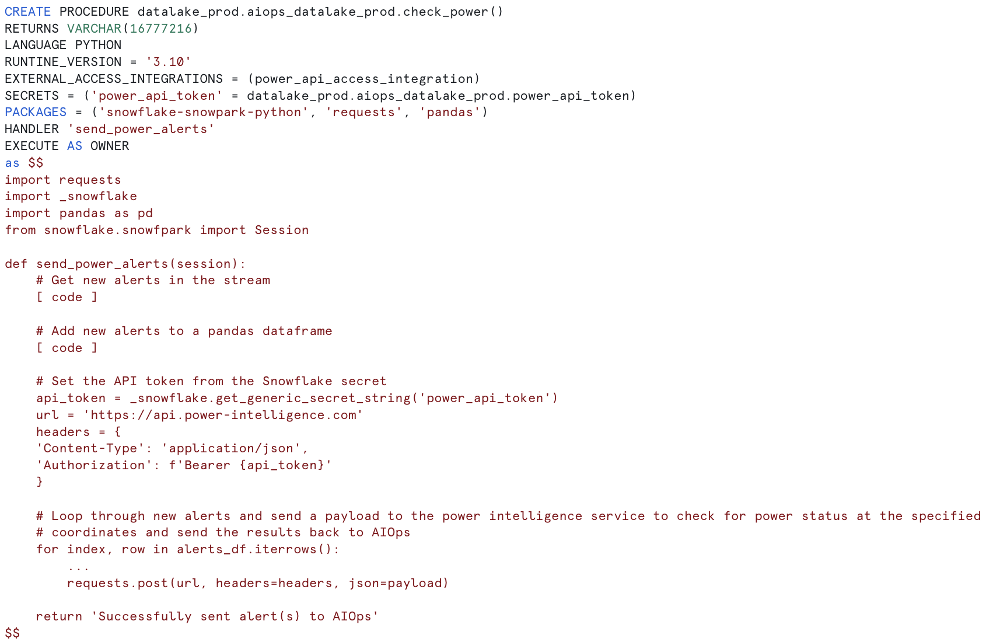

Now we can use the stream and external access integration to write the stored procedure that makes the API call to the third party service.

The stored procedure sends the power status back to AI Ops as an alert. AI Ops uses the device information we sent it to enrich the alert with customer and location details which allows it to compress with the original alert that triggered this pipeline. The Service Assurance team will now be able to see the power status at that location within minutes of the initial alert occurring and this eliminates the need to contact their customers directly.

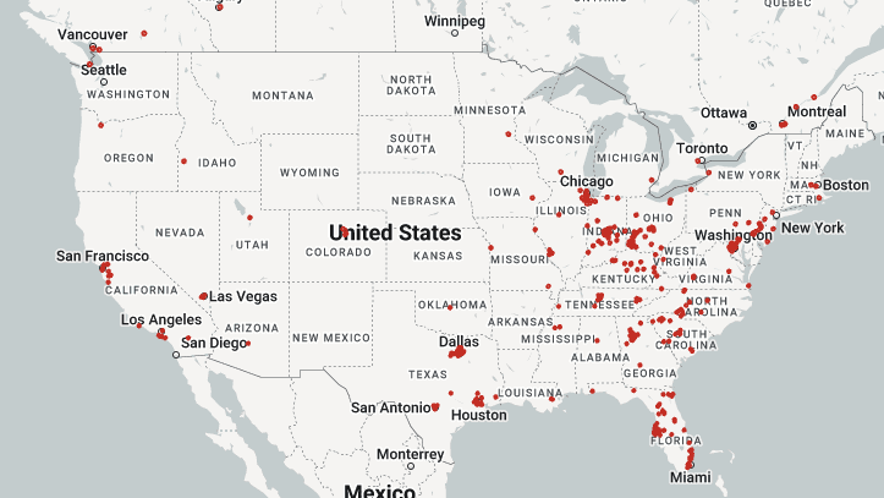

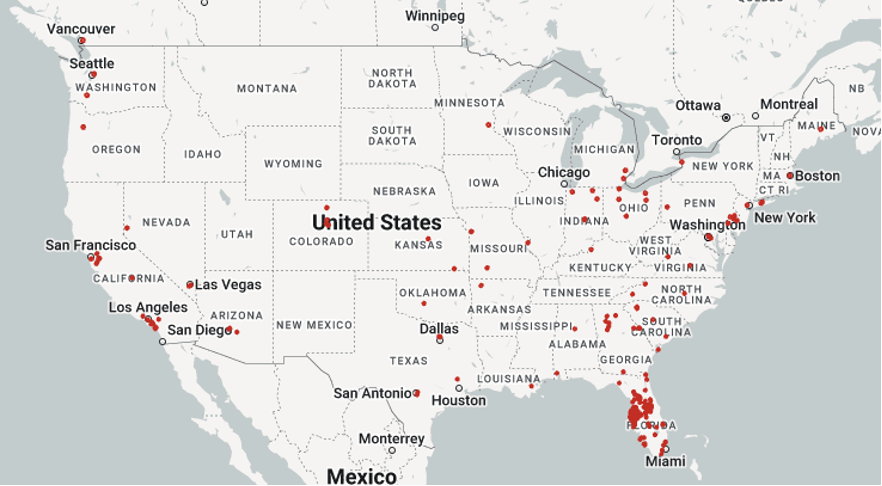

The maps below show what those power outage alerts looked like across the US during the recent hurricanes that affected the southeast: Hurricane Helene and Hurricane Milton.

Hurricane Helene

Hurricane Milton

Conclusion

By leveraging features like Snowpipe for continuous data ingestion, dynamic tables for automated transformations, and streams and tasks for event-driven orchestration, organizations can centralize their pipeline management and reduce their time to valuable insights. Snowflake’s all-in-one capability unlocks countless opportunities for automation.

Contact us to explore how DAS42 can unlock the full potential of Snowflake at your organization. Our mission is to simplify your operations and reduce manual effort so you can focus on driving your business forward.

Services provided